# Classical computing modules

import numpy as np

import pandas as pd

import seaborn as sns

from sklearn.neighbors import KNeighborsClassifier

from sklearn.neural_network import MLPClassifier

# Dataset

from sklearn import datasets, preprocessing

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

# Quantum computing modules

from qiskit import QuantumCircuit, QuantumRegister, ClassicalRegister

from qiskit_aer import AerSimulator

from qiskit.visualization import plot_histogram

from qiskit.quantum_info import Statevector

Quantum Machine Learning

Iris Data Set

# Load the dataset

iris = datasets.load_iris()

feature_labels = iris.feature_names

class_labels = iris.target_names

# Extract the data

X = iris.data

y = np.array([iris.target])

M = 4 # M is the number of features in the data set

# Print the features and classes

print("Features: ", feature_labels)

print("Classes: ", class_labels)Features: ['sepal length (cm)', 'sepal width (cm)', 'petal length (cm)', 'petal width (cm)']

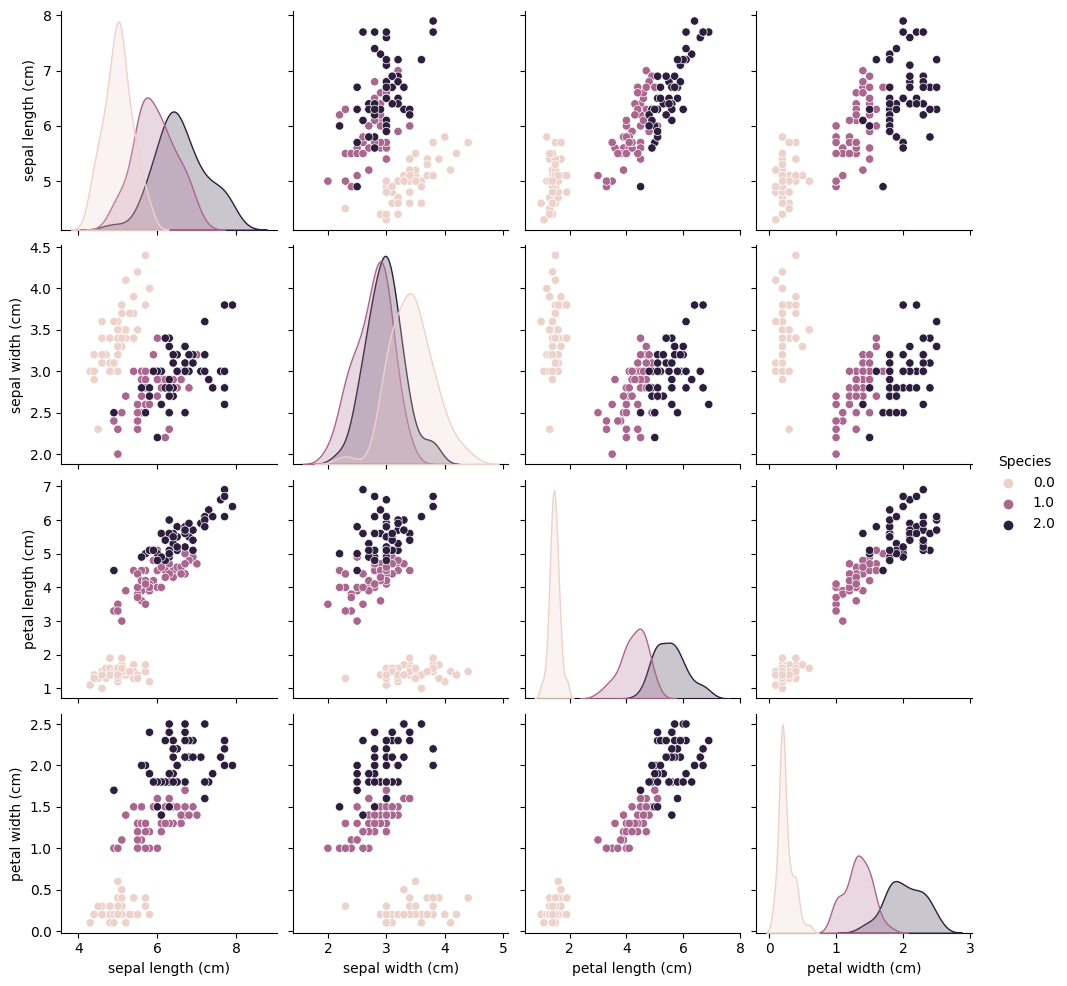

Classes: ['setosa' 'versicolor' 'virginica']df = pd.DataFrame(data = np.concatenate((X, y.T), axis = 1), columns = feature_labels[0:M] + ["Species"])

sns.pairplot(df, hue = 'Species')

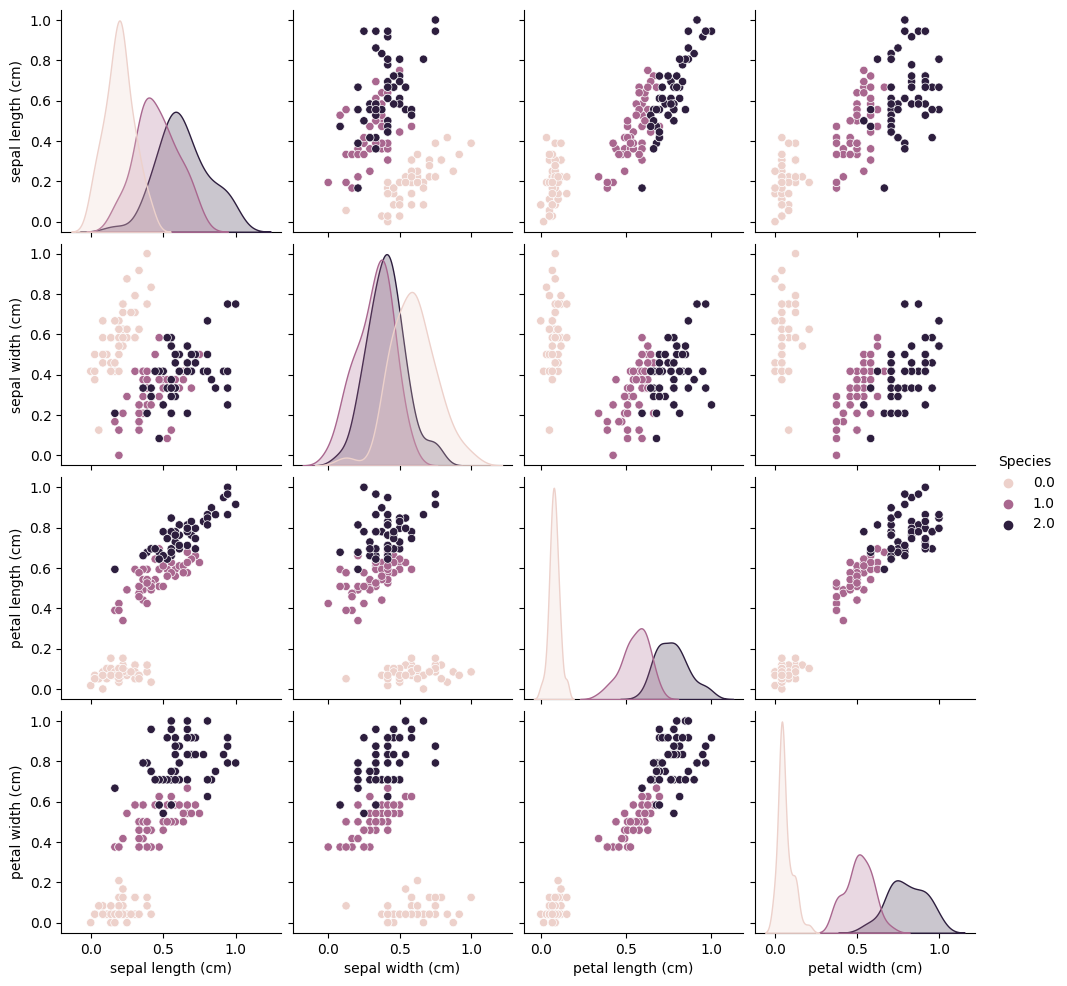

min_max_scaler = preprocessing.MinMaxScaler(feature_range=(0, 1))

min_max_scaler.fit(X)

X_normalized = min_max_scaler.transform(X)

normalized_iris = pd.DataFrame(data = np.concatenate((X_normalized, y.T), axis = 1), columns = feature_labels[0:M] + ["Species"])

sns.pairplot(normalized_iris, hue = 'Species')
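As a rough check on what `MinMaxScaler` does, the same rescaling can be written by hand. This is a minimal sketch on a small made-up array (`X_demo` is hypothetical, not the Iris data): each column is shifted by its minimum and divided by its range, so every feature lands in [0, 1]. The square-root encoding used in the quantum section depends on that range.

```python
import numpy as np

# Hypothetical 3-sample, 2-feature array used only for illustration
X_demo = np.array([[5.1, 0.2],
                   [6.4, 1.5],
                   [7.7, 2.5]])

# Column-wise min-max scaling: (x - min) / (max - min)
col_min = X_demo.min(axis=0)
col_max = X_demo.max(axis=0)
X_scaled = (X_demo - col_min) / (col_max - col_min)

print(X_scaled)
```

Fitting the scaler on all of `X` before splitting lets test-set statistics influence the training data. Fitting it on the training split only is the stricter choice.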

K-Nearest Neighbors (Classical)

The following is modified from this code repo.
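For orientation, the idea behind k-nearest neighbors can be sketched in a few lines of NumPy. This is an illustrative stand-in, not scikit-learn's implementation: the helper `knn_predict` and the toy arrays are hypothetical, distances are plain Euclidean, and ties are broken by whichever label is encountered first.

```python
import numpy as np
from collections import Counter

def knn_predict(X_train, y_train, x_query, k=3):
    """Classify x_query by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every training point
    distances = np.linalg.norm(X_train - x_query, axis=1)
    # Indices of the k smallest distances
    nearest = np.argsort(distances)[:k]
    # Most common label among those neighbors
    return Counter(y_train[nearest].tolist()).most_common(1)[0][0]

# Hypothetical toy data: two well-separated clusters
X_toy = np.array([[0.0, 0.0], [0.1, 0.2], [0.2, 0.1],
                  [1.0, 1.0], [0.9, 1.1], [1.1, 0.9]])
y_toy = np.array([0, 0, 0, 1, 1, 1])

print(knn_predict(X_toy, y_toy, np.array([0.15, 0.1])))
print(knn_predict(X_toy, y_toy, np.array([0.95, 1.0])))
```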

# First test out kNN with the non-normalized data

N_train = 100 # Training dataset size

X_train, X_test, y_train, y_test = train_test_split(X, y[0], train_size=N_train) # Random splitting

knn = KNeighborsClassifier(n_neighbors = 4)

knn.fit(X_train, y_train)

y_pred = knn.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)*100

print(accuracy)

96.0

# Now with the normalized data

N_train = 100 # Training dataset size

X_train, X_test, y_train, y_test = train_test_split(X_normalized, y[0], train_size=N_train)

knn = KNeighborsClassifier(n_neighbors = 4)

knn.fit(X_train, y_train)

y_pred = knn.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)*100

print(accuracy)

94.0

for k in range(1,21):

    knn = KNeighborsClassifier(n_neighbors = k)

    knn.fit(X_train, y_train)

    y_pred = knn.predict(X_test)

    accuracy = accuracy_score(y_test, y_pred)*100

    print(k, accuracy)

1 96.0

2 94.0

3 94.0

4 94.0

5 96.0

6 98.0

7 98.0

8 98.0

9 96.0

10 98.0

11 98.0

12 98.0

13 98.0

14 96.0

15 94.0

16 94.0

17 96.0

18 96.0

19 96.0

20 98.0

K-Nearest Neighbors (Quantum)

# Select a single test sample for classification

# We will run through the algorithm with only a single test

# point to start.

test_index = int(np.random.rand()*len(X_test))

phi_test = X_test[test_index]

print("The test point in classical notation:", phi_test)

# Create the encoded feature vector, it needs to be a single

# vector which is normalized. We are translating classical data to

# quantum form.

# Iterate through each feature

for i in range(M):

# Take a given feature and create a normalized vector of length

# two with the vector

phi_i = [np.sqrt(phi_test[i]), np.sqrt(1- phi_test[i])]

# Create the variable phi_i, which will be the features encoded into

# quantum information. Information is added to the vector with each

# feature using a tensor product.

if i == 0:

phi = phi_i

else:

phi = np.kron(phi_i, phi)

print("The test point in quantum notation is:", phi)The test point in classical notation: [0.72222222 0.5 0.79661017 0.91666667]

The test point in quantum notation is: [0.51351019 0.3184655 0.51351019 0.3184655 0.25947216 0.1609178

0.25947216 0.1609178 0.15482915 0.09602096 0.15482915 0.09602096

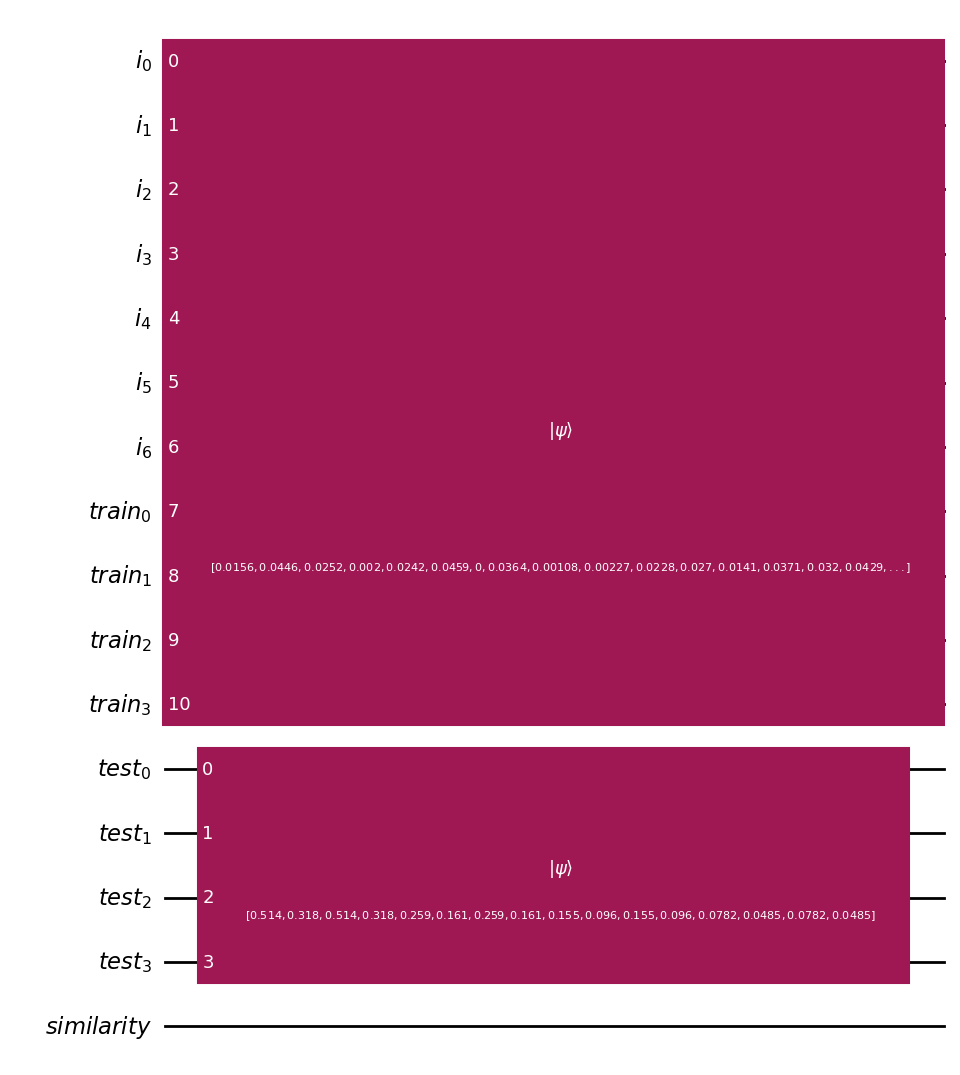

0.0782338 0.04851854 0.0782338 0.04851854]# This cell constructs a quantum state which will encode the training data

# N is the number of qubits needed to index the training points;

# remember that M is the number of features.

N = int(np.ceil(np.log2(N_train)))

print("N:", N, "2^N:", 2**N)

# We need M+N qubits, i.e. a vector of 2^(M+N) amplitudes, to encode

# the training data. Initialize the vector to all zeros at that size.

psi = np.zeros(2**(M + N))

# For each training point

for i in range(N_train):

# Encode |i>, which has a one at the index of the number

# of training points

# 2^N is guaranteed to be bigger than N_train

i_vec = np.zeros(2**N)

i_vec[i] = 1

# Encode |x>, the feature vector, using the same process

# we used above to create the test state.

x = X_train[i]

for j in range(M):

x_temp = [np.sqrt(x[j]), np.sqrt(1- x[j])]

if j == 0:

x_vec = x_temp

else:

x_vec = np.kron(x_temp, x_vec)

# The final state for this training point is found with the tensor

# product of |x> and |i>

psi_i = np.kron(x_vec, i_vec)

# Add the contribution from the current training point to the

# state. The goal is to encode information about every single

# training point into psi

psi += psi_i

# Normalize

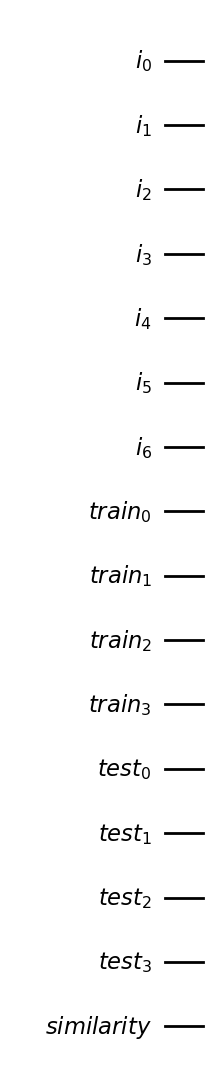

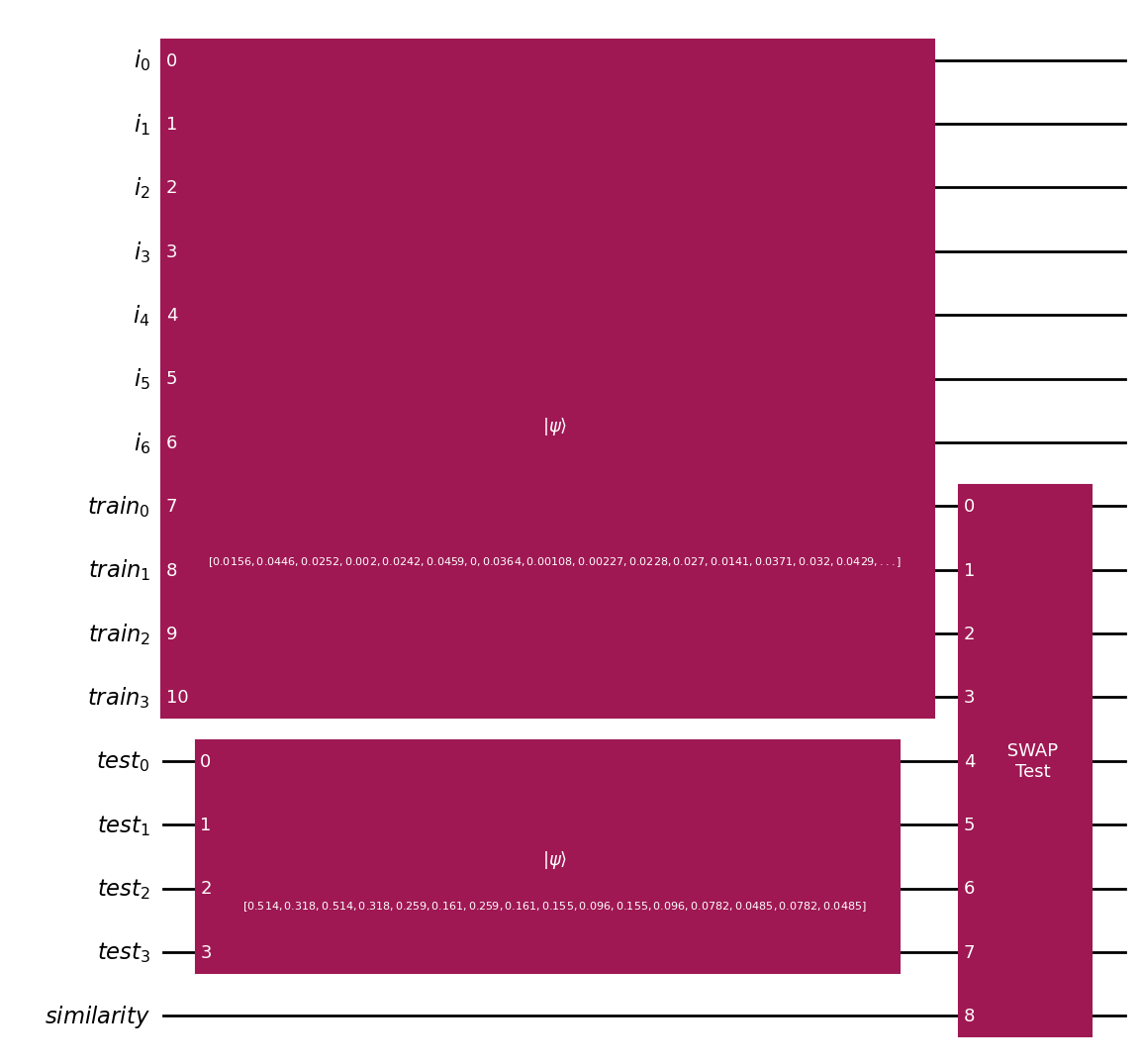

psi /= np.sqrt(N_train)N: 7 2^N: 128# This algorithm is based off of four quantum registers

# that will be combined to form the qubits

# This register handles encoding the indices of the training data

index_reg = QuantumRegister(N, 'i')

# This register holds the quantum representation of the training data

# features

train_reg = QuantumRegister(M, 'train')

# This one holds the test feature vector

test_reg = QuantumRegister(M, 'test')

# This single qubit is used to measure the similarity between

# the test and training points

p = QuantumRegister(1, 'similarity')

# Add each of the registers to a quantum circuit

# Create the quantum circuit

qknn = QuantumCircuit()

# Add registers to the circuit

qknn.add_register(index_reg)

qknn.add_register(train_reg)

qknn.add_register(test_reg)

qknn.add_register(p)

# Draw the quantum circuit

qknn.draw('mpl')

# IMPORTANT: an n-qubit state is described by 2^n amplitudes

# An n-qubit state can be represented by a linear combination of the 2^n vectors of

# the standard computational basis. To accurately describe a quantum state over n

# qubits, you thus need 2^n coefficients (aka amplitudes), which are complex numbers

# in general.

# So the index and training registers can encode psi, which has length 2^(M+N),

# and the test register can encode phi, which has length 2^M

# Initialize the training register using the state from above

qknn.initialize(psi, index_reg[0:N] + train_reg[0:M])

# Initialize the test register using the state from above

qknn.initialize(phi, test_reg)

# Draw qknn

qknn.draw('mpl')

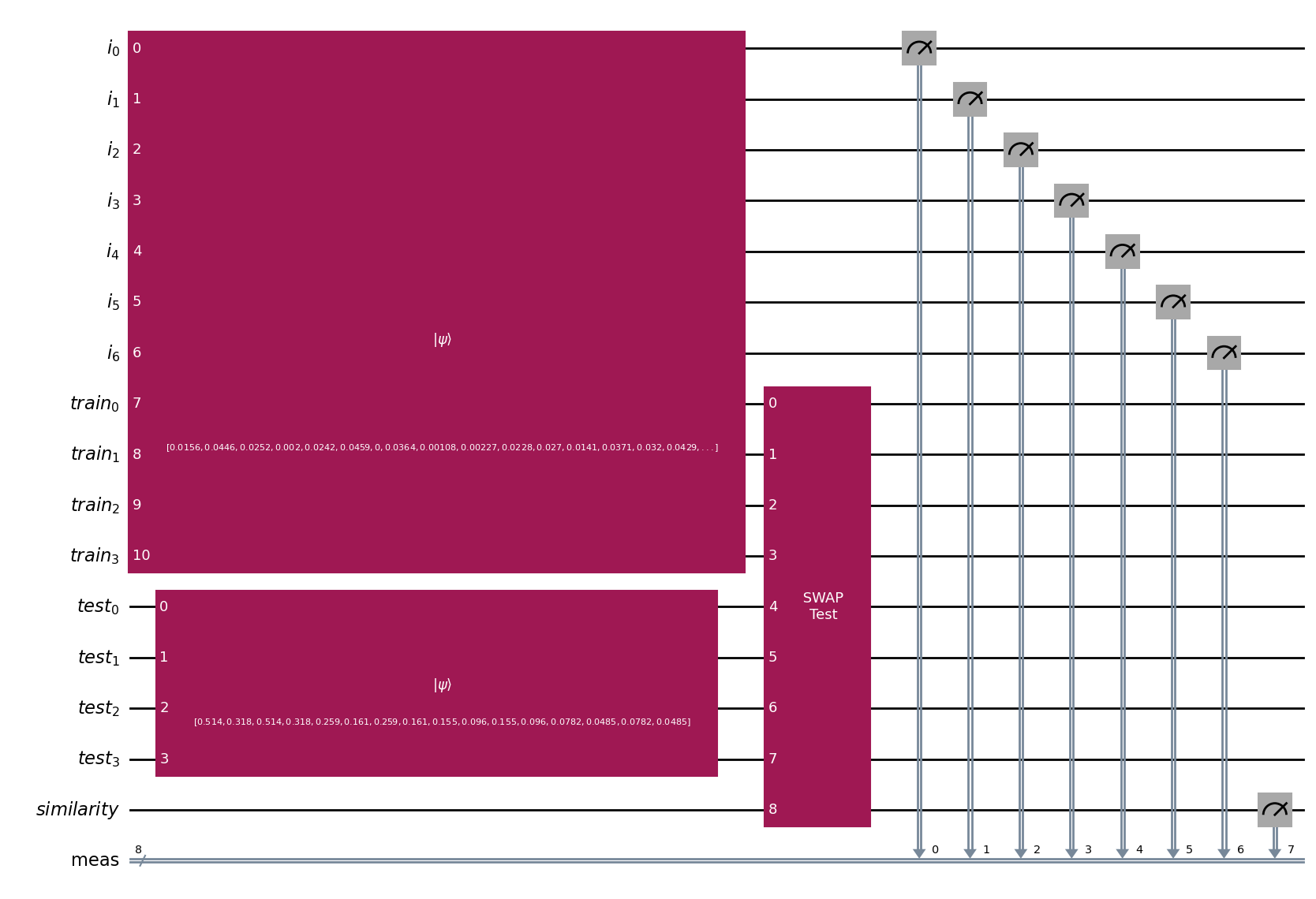
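`initialize` expects a vector of length 2^n with unit norm for n qubits. The sketch below repeats the per-feature encoding from the earlier cells as a small helper and checks both properties. The function name `encode_features` and the sample `x_demo` are hypothetical and used only for illustration.

```python
import numpy as np

def encode_features(x):
    """Map features in [0, 1] to a product state of len(x) qubits."""
    state = np.array([1.0])
    for value in x:
        qubit = np.array([np.sqrt(value), np.sqrt(1 - value)])
        # New qubit goes on the left, matching np.kron(phi_i, phi) in the notebook
        state = np.kron(qubit, state)
    return state

x_demo = np.array([0.72, 0.5, 0.8, 0.92])  # hypothetical normalized sample
phi_demo = encode_features(x_demo)

print(len(phi_demo))             # number of amplitudes for 4 qubits
print(np.linalg.norm(phi_demo))  # should be very close to 1
```

Because each two-component vector has unit norm, the Kronecker product does too, which is why no extra normalization is needed for the test state.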

def swap_test(N):

    '''

    `N`: Number of qubits of the quantum registers.

    '''

    a = QuantumRegister(N, 'a')

    b = QuantumRegister(N, 'b')

    d = QuantumRegister(1, 'd')

    # Quantum Circuit

    qc_swap = QuantumCircuit(name = ' SWAP \nTest')

    qc_swap.add_register(a)

    qc_swap.add_register(b)

    qc_swap.add_register(d)

    qc_swap.h(d)

    for i in range(N):

        qc_swap.cswap(d, a[i], b[i])

    qc_swap.h(d)

    return qc_swap

# Apply the Quantum SWAP Test module to the quantum circuit

qknn.append(swap_test(M), train_reg[0:M] + test_reg[0:M] + [p[0]])

# Draw qknn

qknn.draw('mpl')
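The SWAP test appended above turns state overlap into a measurement probability. For normalized states |a> and |b>, the ancilla reads 1 with probability P(1) = 1/2 - |<a|b>|^2 / 2, so 1 - 2 P(1) recovers the squared overlap. The sketch below checks this with plain NumPy rather than Qiskit. The helper `swap_test_p1` is hypothetical and works on state vectors directly.

```python
import numpy as np

def swap_test_p1(a, b):
    """Return P(ancilla = 1) for a SWAP test on normalized state vectors a and b."""
    a = np.asarray(a, dtype=complex)
    b = np.asarray(b, dtype=complex)
    ab = np.kron(a, b)  # |a>|b>
    ba = np.kron(b, a)  # |b>|a>, the two registers swapped
    # After H, controlled-SWAP, H the ancilla-1 branch is (|a>|b> - |b>|a>) / 2
    branch_1 = (ab - ba) / 2
    return float(np.vdot(branch_1, branch_1).real)

zero = np.array([1.0, 0.0])
one = np.array([0.0, 1.0])
plus = np.array([1.0, 1.0]) / np.sqrt(2)

for name, state in [("identical", zero), ("orthogonal", one), ("partial overlap", plus)]:
    p1 = swap_test_p1(zero, state)
    print(name, "P(1) =", p1, "similarity =", 1 - 2 * p1)
```

Identical states never give a 1 on the ancilla, while orthogonal states give it half the time. This is why the quantity `1 - 2*prob_1` serves as a similarity score later in the notebook.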

# Create the classical register

meas_reg_len = N + 1

meas_reg = ClassicalRegister(meas_reg_len, 'meas')

qknn.add_register(meas_reg)

# Measure the qubits

qknn.measure(index_reg[0::] + p[0::], meas_reg)

# Draw qknn

qknn.draw('mpl', fold = -1)
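The measurement above maps the N index qubits to classical bits 0 through N-1 and the similarity qubit to bit N. Qiskit writes count keys with the highest classical bit leftmost, so the first character of each key is the SWAP-test outcome and the remaining characters are the training index in binary. A minimal sketch with made-up counts shows the bookkeeping. `demo_counts` is hypothetical and N is shrunk to 2 for readability.

```python
# Hypothetical counts for N = 2 index qubits plus 1 similarity bit
demo_counts = {"000": 40, "100": 10, "001": 30, "101": 20}

n_index_bits = 2
tallies = {}  # training index -> [shots with ancilla 0, shots with ancilla 1]

for bitstring, shots in demo_counts.items():
    ancilla = int(bitstring[0])                   # leftmost character: similarity qubit
    index = int(bitstring[-n_index_bits:], 2)     # remaining characters: training index
    tallies.setdefault(index, [0, 0])[ancilla] += shots

# Estimated squared overlap for each training index: 1 - 2 * P(ancilla = 1)
similarity = {i: 1 - 2 * c[1] / (c[0] + c[1]) for i, c in tallies.items()}
print(similarity)
```

With a finite number of shots, a training index may receive very few counts, so the per-index estimates can be noisy.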

from qiskit import transpile

simulator = AerSimulator()

t_qknn = transpile(qknn, simulator)

counts_knn = simulator.run(t_qknn).result().get_counts()

result_arr = np.zeros((N_train, 3))

for count in counts_knn:

    i_dec = int(count[1::], 2)

    phase = int(count[0], 2)

    if phase == 0:

        result_arr[i_dec, 0] += counts_knn[count]

    else:

        result_arr[i_dec, 1] += counts_knn[count]

for i in range(N_train):

    prob_1 = result_arr[i][1]/(result_arr[i][0] + result_arr[i][1])

    result_arr[i][2] = 1 - 2*prob_1

# Find the indexes of minimum distance

import scipy.stats

k = 10

k_min_dist_arr = result_arr[:, 2].argsort()[::-1][:k]

# Determine the class of the test sample

y_pred = scipy.stats.mode(y_train[k_min_dist_arr])[0]

y_exp = y_test[test_index]

print('Predicted class of the test sample is {}.'.format(y_pred))

print('Expected class of the test sample is {}.'.format(y_exp))

Predicted class of the test sample is 2.

Expected class of the test sample is 2.

Neural Networks (Classical)

# Load the dataset

iris = datasets.load_iris()

feature_labels = iris.feature_names

class_labels = iris.target_names

# Extract the data

X = iris.data

y = np.array([iris.target])

print(type(y))

M = 4 # M is the number of features in the data set

# Print the features and classes

print("Features: ", feature_labels)

print("Classes: ", class_labels)

<class 'numpy.ndarray'>

Features: ['sepal length (cm)', 'sepal width (cm)', 'petal length (cm)', 'petal width (cm)']

Classes: ['setosa' 'versicolor' 'virginica']

min_max_scaler = preprocessing.MinMaxScaler(feature_range=(0, 1))

min_max_scaler.fit(X)

X_normalized = min_max_scaler.transform(X)

N_train = 100

X_train, X_test, y_train, y_test = train_test_split(X, y[0], train_size=N_train)

# hidden_layer_sizes: tuple describing the number of neurons in each hidden

# layer

# activation: the activation function; ‘identity’, ‘logistic’, ‘tanh’, ‘relu’

# solver: the optimizer; ‘lbfgs’, ‘sgd’, ‘adam’

# max_iter: the maximum number of training iterations the neural network will

# take to train

# tol: if the loss fails to improve by at least the tolerance for several

# consecutive iterations, stop training

# Typically the loss function is also a hyperparameter, but MLPClassifier

# fixes it to log-loss (scikit-learn is a fairly restrictive nn implementation)

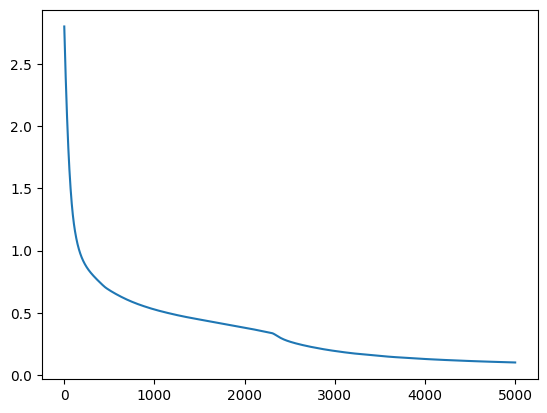

nn = MLPClassifier(hidden_layer_sizes=(4, 3, 2), activation='relu',

solver='adam', max_iter=5000, tol=0.000001)

nn.fit(X_train, y_train)

y_pred = nn.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)*100

print(accuracy)

96.0

import matplotlib.pyplot as plt

loss_curve = nn.loss_curve_

plt.plot(range(len(loss_curve)), loss_curve)
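The hyperparameters described in the comments above (`hidden_layer_sizes`, `activation`, `max_iter`) are usually tuned on data that is not also used for the final score. One possible approach is cross-validation on the training split, sketched here with scikit-learn's `GridSearchCV`. The tiny grid, `cv=3`, `max_iter=300`, and the fixed `random_state` values are assumptions chosen to keep the example quick, not settings from this notebook.

```python
import warnings

from sklearn import datasets
from sklearn.exceptions import ConvergenceWarning
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neural_network import MLPClassifier

X_cv, y_cv = datasets.load_iris(return_X_y=True)
X_tr, X_te, y_tr, y_te = train_test_split(X_cv, y_cv, train_size=100, random_state=0)

# Deliberately tiny grid so the sketch runs quickly
param_grid = {
    "hidden_layer_sizes": [(10,), (4, 3, 2)],
    "activation": ["tanh", "relu"],
}

with warnings.catch_warnings():
    # Short runs may not converge; silence the warning for this sketch only
    warnings.simplefilter("ignore", category=ConvergenceWarning)
    search = GridSearchCV(
        MLPClassifier(solver="adam", max_iter=300, random_state=0),
        param_grid,
        cv=3,
    )
    search.fit(X_tr, y_tr)

print("Best cross-validated params:", search.best_params_)
print("Held-out test accuracy:", search.score(X_te, y_te) * 100)
```

Because the test split plays no part in choosing the hyperparameters, the final accuracy is a less optimistic estimate than one obtained by picking the configuration that scores best on the test set.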

hidden_layers = [(100), (10,10,10,10), (4,3,2), (5)]

activation = ['identity', 'logistic', 'tanh', 'relu']

max_iter = [100, 500, 1000, 5000]

best_accuracy = 0

best_params = []

for hl in hidden_layers:

    for a in activation:

        for mi in max_iter:

            nn = MLPClassifier(hidden_layer_sizes=hl,

                               activation=a, solver='adam',

                               max_iter=mi, tol=0.000001)

            nn.fit(X_train, y_train)

            y_pred = nn.predict(X_test)

            accuracy = accuracy_score(y_test, y_pred)*100

            if accuracy > best_accuracy:

                best_accuracy = accuracy

                best_params = [hl, a, mi]

print("Best Accuracy:", best_accuracy)

print("Best Hyperparameters:", best_params)

Best Accuracy: 100.0

Best Hyperparameters: [100, 'tanh', 100]

nn = MLPClassifier(hidden_layer_sizes=best_params[0],

activation=best_params[1], solver='adam',

max_iter=best_params[2], tol=0.000001)

nn.fit(X_train, y_train)

y_pred = nn.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)*100

print(accuracy)

100.0

X_train, X_test, y_train, y_test = train_test_split(X, y[0],

train_size=N_train)

nn = MLPClassifier(hidden_layer_sizes=best_params[0],

activation=best_params[1], solver='adam',

max_iter=best_params[2], tol=0.000001)

nn.fit(X_train, y_train)

y_pred = nn.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)*100

print(accuracy)

100.0

Quantum Neural Network Classifier

The below code is modified from the Qiskit Machine Learning tutorial on the variational quantum classifier.

The Variational Quantum Classifier (VQC) is a variational algorithm like the variational quantum eigensolver (VQE). It is a hybrid algorithm: a quantum circuit whose ansatz contains optimizable parameters produces the output, and a classical optimizer tunes those parameters over several iterations.
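The hybrid loop can be illustrated without any quantum library. The sketch below is a toy stand-in, not the VQC API: a one-qubit circuit RY(theta) RY(x) |0> is evaluated in closed form, where P(1) = sin^2((x + theta) / 2), and SciPy's COBYLA optimizer plays the role of the classical optimizer. The data set, the loss, and the names `prob_one` and `loss` are all assumptions made for illustration.

```python
import numpy as np
from scipy.optimize import minimize

# Hypothetical 1-feature data set: small angles are class 0, angles near pi are class 1
x_data = np.array([0.1, 0.4, 2.6, 3.0])
y_data = np.array([0, 0, 1, 1])

def prob_one(x, theta):
    """P(measure 1) for the one-qubit circuit RY(theta) RY(x) |0>."""
    return np.sin((x + theta) / 2) ** 2

def loss(params):
    """Mean squared error between P(1) and the labels."""
    return float(np.mean((prob_one(x_data, params[0]) - y_data) ** 2))

initial = np.array([2.0])  # deliberately poor starting angle
result = minimize(loss, initial, method="COBYLA")

predictions = (prob_one(x_data, result.x[0]) > 0.5).astype(int)
print("initial loss:", loss(initial))
print("final loss:", result.fun)
print("predictions:", predictions)
```

Here RY(x) stands in for the feature map and RY(theta) for the ansatz. In a real VQC the probability would be estimated from circuit measurements rather than computed from a formula.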

!pip install qiskit_algorithms

!pip install qiskit_machine_learningDefaulting to user installation because normal site-packages is not writeable

Requirement already satisfied: qiskit_algorithms in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (0.3.1)

Requirement already satisfied: qiskit>=0.44 in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (from qiskit_algorithms) (1.0.2)

Requirement already satisfied: scipy>=1.4 in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (from qiskit_algorithms) (1.11.2)

Requirement already satisfied: numpy>=1.17 in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (from qiskit_algorithms) (1.25.2)

Requirement already satisfied: rustworkx>=0.14.0 in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (from qiskit>=0.44->qiskit_algorithms) (0.14.2)

Requirement already satisfied: sympy>=1.3 in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (from qiskit>=0.44->qiskit_algorithms) (1.12)

Requirement already satisfied: dill>=0.3 in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (from qiskit>=0.44->qiskit_algorithms) (0.3.8)

Requirement already satisfied: python-dateutil>=2.8.0 in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (from qiskit>=0.44->qiskit_algorithms) (2.8.2)

Requirement already satisfied: stevedore>=3.0.0 in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (from qiskit>=0.44->qiskit_algorithms) (5.2.0)

Requirement already satisfied: typing-extensions in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (from qiskit>=0.44->qiskit_algorithms) (4.7.1)

Requirement already satisfied: symengine>=0.11 in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (from qiskit>=0.44->qiskit_algorithms) (0.11.0)

Requirement already satisfied: six>=1.5 in /Library/Developer/CommandLineTools/Library/Frameworks/Python3.framework/Versions/3.9/lib/python3.9/site-packages (from python-dateutil>=2.8.0->qiskit>=0.44->qiskit_algorithms) (1.15.0)

Requirement already satisfied: pbr!=2.1.0,>=2.0.0 in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (from stevedore>=3.0.0->qiskit>=0.44->qiskit_algorithms) (6.0.0)

Requirement already satisfied: mpmath>=0.19 in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (from sympy>=1.3->qiskit>=0.44->qiskit_algorithms) (1.3.0)

[notice] A new release of pip is available: 23.2.1 -> 24.3.1

[notice] To update, run: /Library/Developer/CommandLineTools/usr/bin/python3 -m pip install --upgrade pip

Defaulting to user installation because normal site-packages is not writeable

Requirement already satisfied: qiskit_machine_learning in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (0.8.0)

Requirement already satisfied: qiskit>=1.0 in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (from qiskit_machine_learning) (1.0.2)

Requirement already satisfied: scipy>=1.4 in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (from qiskit_machine_learning) (1.11.2)

Requirement already satisfied: numpy>=1.17 in /Users/butlerju/Library/Python/3.9/lib/python/site-packages (from qiskit_machine_learning) (1.25.2)

from qiskit.circuit.library import ZZFeatureMap

from qiskit.circuit.library import RealAmplitudes

from qiskit_machine_learning.algorithms.classifiers import VQC

from qiskit_algorithms.utils import algorithm_globals

from qiskit_algorithms.optimizers import COBYLA

from matplotlib import pyplot as plt

from IPython.display import clear_output

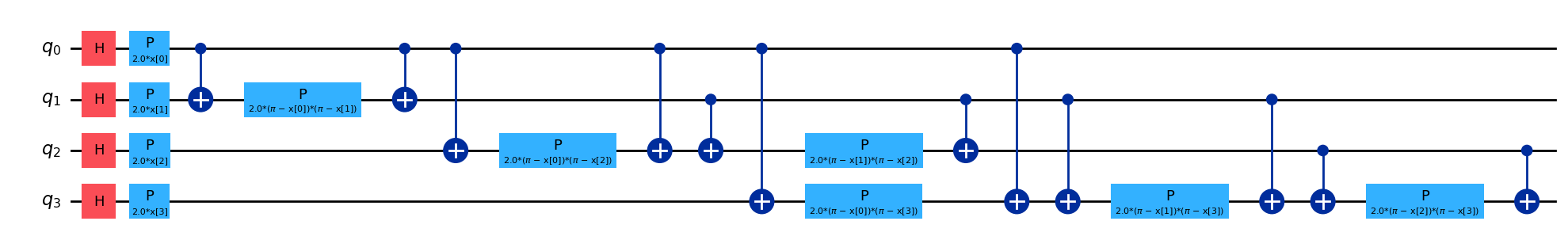

import time

# Get the number of features in the training data

num_features = len(X_normalized[0])

# The feature map encodes the features of the training data. We specify

# the number of features and apply the map to the circuit once (reps=1).

# Note the values x[0], x[1], x[2], and x[3]: these placeholders are filled

# with the feature values once the neural network is run.

# The feature map acts as the input layer of a classical neural network: it

# takes in the data and prepares it to be passed to the rest of the network,

# so it appears first in the algorithm.

feature_map = ZZFeatureMap(feature_dimension=num_features, reps=1)

feature_map.decompose().draw(output="mpl", fold=-1)

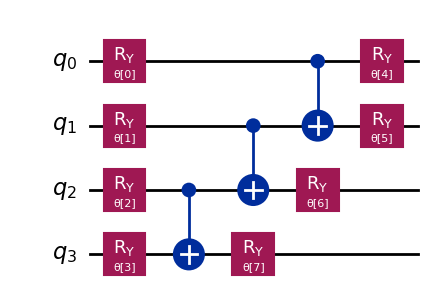
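To make the encoding in the circuit drawing concrete, the sketch below computes the phase angles that one repetition of the feature map would apply to a single normalized sample. It assumes the default ZZFeatureMap data map (phi(x_i) = x_i for single qubits, phi(x_i, x_j) = (pi - x_i)(pi - x_j) for pairs, each phase gate using an angle of 2*phi) and full pairwise entanglement. The helper name `zz_feature_map_angles` and the sample values are hypothetical, and the code only mirrors the arithmetic; it does not build a circuit.

```python
import itertools

import numpy as np


def zz_feature_map_angles(x):
    """Phase angles for one repetition, under the assumed default data map."""
    x = np.asarray(x, dtype=float)
    # Single-qubit phase gates: angle 2 * x_i on qubit i
    single = {i: 2.0 * x[i] for i in range(len(x))}
    # Two-qubit ZZ interactions: angle 2 * (pi - x_i) * (pi - x_j) per pair
    pair = {
        (i, j): 2.0 * (np.pi - x[i]) * (np.pi - x[j])
        for i, j in itertools.combinations(range(len(x)), 2)
    }
    return single, pair


# Hypothetical normalized sample with four features in [0, 1]
sample = [0.2, 0.6, 0.1, 0.0]
single_angles, pair_angles = zz_feature_map_angles(sample)
print("Single-qubit angles:", single_angles)
print("Number of pairwise angles:", len(pair_angles))
```

With four features there are four single-qubit angles and six pairwise angles, which is why the decomposed circuit grows quickly as features are added.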

# The real amplitudes function will create a parameterized circuit that will

# act like the hidden layers of our network. We specify the number of qubits

# in the circuit to be the number of features in the training data. We will

# start the circuit with one repetition, giving us 8 parameters (theta[0] -

# theta[7]) to optimize when training the neural network.

# Phrased another way, in the language of variational quantum algorithms, this

# function is the ansatz of our quantum algorithm, and its values theta will

# be optimized.

# Note that the number of reps can be increased here to increase the number of

# trainable parameters (similar to increasing the number of neurons in a

# classical neural network). However, the number of reps in the feature map must

# remain at 1.

ansatz = RealAmplitudes(num_qubits=num_features, reps=1)

ansatz.decompose().draw(output="mpl")
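The count of 8 parameters quoted in the comments can be checked with a little arithmetic. The sketch below assumes the default RealAmplitudes layout, with one layer of RY rotations before the first entangling block and one more after each repetition. The helper `real_amplitudes_param_count` is a hypothetical name used only for illustration.

```python
def real_amplitudes_param_count(num_qubits, reps):
    """Trainable parameters, assuming one RY per qubit in each rotation layer."""
    # One initial rotation layer plus one rotation layer per repetition
    return num_qubits * (reps + 1)


# Four qubits (one per iris feature) with an increasing number of repetitions
for reps in (1, 2, 3):
    print(f"reps={reps}: {real_amplitudes_param_count(4, reps)} parameters")
```

Each extra repetition therefore adds four more values of theta for the optimizer to tune.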

# Define the list which will hold the values of the loss function

# every iteration

loss_func_vals = []

# This function will run with every training iteration of the quantum neural

# network. The function must take the current weights of the model (the values

# of theta in the real amplitudes function) and the current value of the loss

# function. This particular function creates a training iteration vs. loss

# graph to see the value of the loss function as the network trains but in

# principle this function can do anything.

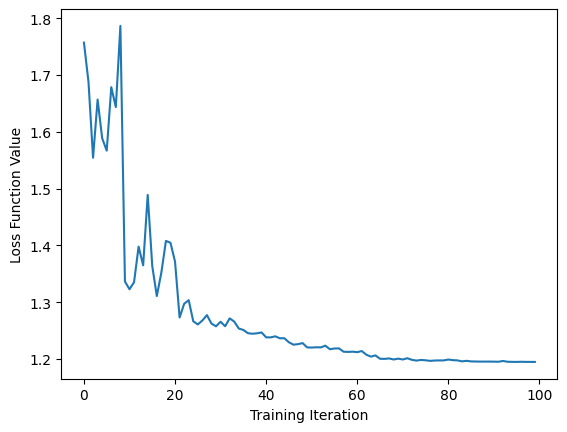

def callback_graph(weights, loss_func_current):

    clear_output(wait=True)

    loss_func_vals.append(loss_func_current)

    plt.xlabel("Training Iteration")

    plt.ylabel("Loss Function Value")

    plt.plot(range(len(loss_func_vals)), loss_func_vals)

    plt.show()
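Since the callback can in principle do anything, a lighter-weight variant is sometimes handy. The sketch below only records the loss and skips the plotting. It assumes the same two-argument signature as `callback_graph`; the names `callback_record` and `loss_history` are hypothetical, and the two calls at the end simulate what a training loop would do.

```python
# Separate list so the plotting callback's history is left untouched
loss_history = []


def callback_record(weights, loss_func_current):
    """Store the current loss value without redrawing a figure."""
    loss_history.append(float(loss_func_current))


# Simulate two training iterations with 8 made-up weights each
callback_record([0.1] * 8, 1.25)
callback_record([0.2] * 8, 0.9)
print(loss_history)
```

A callback like this could be passed in place of `callback_graph` when training time matters more than a live plot, and the stored values can still be plotted once after training.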

# Define the optimizer that will be used to find the optimized values of

# theta.

# maxiter is the number of training iterations the network will have.

# This can be increased or decreased.

optimizer = COBYLA(maxiter=100)
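To see what a derivative-free optimizer with an iteration cap does, here is a small stand-alone sketch that uses SciPy's COBYLA method on a toy two-parameter loss. It assumes SciPy is installed. The function `toy_loss` and its target values are invented for illustration and have nothing to do with the quantum circuit; only the `maxiter` setting mirrors the cell above.

```python
import numpy as np
from scipy.optimize import minimize


def toy_loss(theta):
    """Simple quadratic bowl with its minimum at theta = (1, -2)."""
    target = np.array([1.0, -2.0])
    return float(np.sum((np.asarray(theta) - target) ** 2))


# COBYLA needs only loss values, not gradients; maxiter caps the evaluations
result = minimize(toy_loss, x0=np.zeros(2), method="COBYLA",
                  options={"maxiter": 100})
print("Best parameters found:", result.x)
print("Loss at those parameters:", result.fun)
```

In the quantum classifier the optimizer plays the same role, except that each loss evaluation requires running the circuit with the current values of theta.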

# VQC stands for “variational quantum classifier.” It takes a feature

# map and an ansatz and constructs a quantum neural network

# automatically. We also need to pass the optimizer and the callback

# function (this last one is optional). The loss function by default is

# the cross entropy function, which is pretty standard for

# classifications.

vqc = VQC(

    feature_map=feature_map,

    ansatz=ansatz,

    optimizer=optimizer,

    callback=callback_graph,

)
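For readers who have not met cross entropy before, the sketch below computes it with NumPy for two made-up predictions over three classes. It is an illustration of the formula only, not the library's implementation: the helper `cross_entropy`, the averaging over samples, and the probability values are all assumptions chosen for the example.

```python
import numpy as np


def cross_entropy(probabilities, one_hot_labels, eps=1e-12):
    """Mean cross entropy between predicted probabilities and one-hot labels."""
    # Clip to avoid taking log(0) for classes predicted with zero probability
    p = np.clip(np.asarray(probabilities, dtype=float), eps, 1.0)
    y = np.asarray(one_hot_labels, dtype=float)
    return float(-np.sum(y * np.log(p)) / len(y))


# Two hypothetical samples: predicted class probabilities and true classes
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1]])
labels = np.array([[1, 0, 0],
                   [0, 1, 0]])
loss = cross_entropy(probs, labels)
print("Cross entropy:", loss)
```

The loss shrinks toward zero as the probability assigned to the correct class approaches one, which is what the optimizer is driving toward during training.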

# Split the data into a training and test set, making sure to use

# the normalized data

X_train, X_test, y_train, y_test = train_test_split(X_normalized,

y[0], train_size=N_train)

# clear loss value history

loss_func_vals = []

# Train the variational quantum classifier while also timing the process.

# Note that the time does go down if you remove the callback function from

# the initialization. Creating many graphs does take some time.

start = time.time()

vqc.fit(X_train, y_train)

elapsed = time.time() - start

# Print the total time needed to train the network

print(f"Training time: {round(elapsed)} seconds")

Training time: 39 seconds

# Compute the accuracy with the training data and the test data (aka

# data it knows and data it has never seen before). Print the scores

# after.

train_score_q4 = vqc.score(X_train, y_train)

test_score_q4 = vqc.score(X_test, y_test)

print("Quantum VQC on the training dataset:",

      np.round(train_score_q4*100))

print("Quantum VQC on the test dataset:",

      np.round(test_score_q4*100))

Quantum VQC on the training dataset: 71.0

Quantum VQC on the test dataset: 68.0
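The scores above are read here as mean accuracies, assuming `vqc.score` follows the usual scikit-learn classifier convention of returning the fraction of correctly labeled samples. The sketch below shows that calculation with NumPy on made-up labels. The helper `mean_accuracy` and the label arrays are hypothetical and are not taken from the iris results.

```python
import numpy as np


def mean_accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))


# Five hypothetical samples, one of which is misclassified
y_true = [0, 1, 2, 2, 1]
y_pred = [0, 1, 1, 2, 1]
accuracy = mean_accuracy(y_true, y_pred)
print("Accuracy (%):", np.round(accuracy * 100))
```

Comparing the training and test values of this number is what shows whether the classifier generalizes to data it has never seen.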